By Adedeji Adewumi

In the aftermath of the Yelewata massacre, as charred homes still smouldered and families buried their dead, one thing became painfully clear: we are fighting the wrong kind of war with the wrong set of tools. What is unfolding across the Middle Belt is not communal unrest, nor is it merely a farmer–herder dispute. It is asymmetric warfare—strategic, persistent, and fuelled by our failure to adapt. These attackers move under darkness, strike swiftly, and vanish before dawn. They understand our blind spots—in surveillance, response time, and imagination.

You don’t counter that with battalions arriving 48 hours too late. You counter it with intelligence. And in today’s world, that means artificial intelligence.

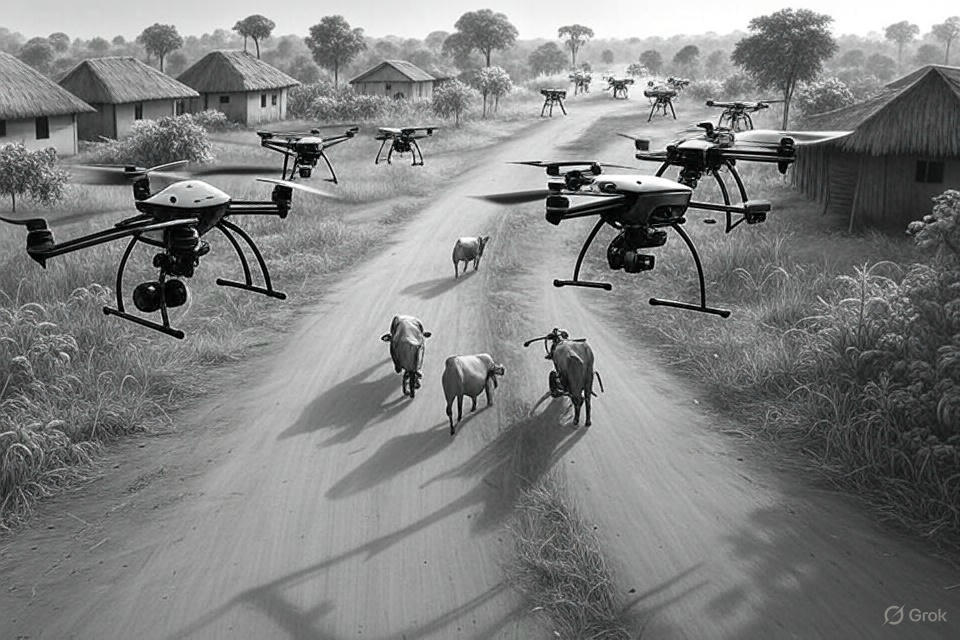

Across multiple intelligence reports, accounts from local informants, and conversations with civil society actors operating in the Middle Belt, clear patterns emerge. Entry points used by armed groups, often footpaths winding through low-density bush, remain invisible to conventional patrols. To an AI-powered drone trained to detect heat signatures or abnormal movement, however, these routes light up like runways. In Guma, Logo, and parts of Gwer West, communities consistently report strange movements, unfamiliar faces, and silent convoys of motorcycles appearing days before attacks. These are signals, missed only because we lack the systems to detect and process them.

Globally, AI is already shaping the future of internal defence. Platforms like Palantir’s Gotham have helped US forces in Iraq and Afghanistan identify insurgent activity by fusing satellite imagery, drone feeds, intercepted calls, and human intelligence into a single, predictive dashboard. In Ukraine, Palantir’s software is being used to track and pre-empt Russian military action in near real time.

In countries like Israel, Estonia, and Rwanda, AI-enabled defence systems are being used to monitor border zones and rural corridors. They detect irregular activity and alert security operatives long before traditional surveillance would. These systems don’t just collect data—they learn. They adapt to the terrain, refine their predictions, and evolve with every new data point.

Nigeria can build similar capabilities.

Deploying a network of AI-powered drones and sensors across flashpoint corridors in Nasarawa, Benue, Plateau, and Taraba would give us a fighting chance. These tools can be trained to detect abnormal clustering of motorbikes, cattle movement patterns that suggest cover for militant activity, or the sudden emergence of foot traffic in uninhabited areas. With real-time transmission to command centres in Makurdi and Lafia, this intelligence can inform strategic intervention before violence erupts.

But the promise of AI in this war goes beyond what we can see. It includes what we can hear. A consistent theme from local engagements is the way tension builds before attacks: through whispers in markets, anonymous voice notes, and coded language on community radio. Natural Language Processing (NLP), a branch of AI already deployed globally to monitor extremist content, can help detect and interpret these signals. Trained on local languages and dialects and contextualised for Nigeria’s unique patterns of speech, these models can flag early signs of mobilisation, hate speech, and misinformation.

In fact, following several recent attacks, misinformation spread like wildfire—false images, inflammatory messages, and threats of reprisal. These not only deepen trauma but also incite further violence. AI models trained to detect disinformation patterns could help defuse this cycle before it spirals out of control.

Some argue this technology is too advanced for Nigeria. I disagree. Rwanda already uses AI-driven drones for security and agricultural monitoring. Estonia, a country with fewer people than Lagos, relies on decentralised, real-time defence systems powered by data. Nigeria has the talent: our universities and tech hubs are producing AI engineers and data scientists at scale. What we lack is institutional urgency and coordinated investment in homegrown solutions.

Importantly, AI is not a substitute for local vigilance. It is a force multiplier. In Gwer West, for example, local vigilantes use a basic SMS alert chain to warn neighbouring communities. With AI layered in, that chain could become an intelligence web, verifying threats in seconds, ranking urgency, and alerting security forces in real time.

This is what non-kinetic warfare looks like. The objective is not to outgun the enemy, but to outthink them. It is to disrupt coordination, harden targets, and impose a high cost on attempts to destabilise communities.

Of course, safeguards are needed. AI systems must be governed, transparent, and community-informed. They must not be used for political surveillance or to violate civil liberties. But these concerns are manageable—and far less grave than the consequences of doing nothing.

This is not a call for another round of motorcycles for local police or a fresh deployment of fatigued troops. It is a call for a shift in thinking, one that recognises that modern wars are not always fought on frontlines. They are fought in data streams, in anticipatory systems, and in the ability to respond faster than your adversary can move.

The Middle Belt is not short of courage. It is short of the capacity to predict, prevent, and protect. That capacity will not come from repeating the past; it will come from investing in the future.

We’ve tried more guns. We’ve tried more soldiers. It’s time we tried something else. It’s time we fought these ghosts with code.

Adedeji Adewumi is the founder of 52, an advisory firm working at the intersection of policy, communications, and governance.